prompting



Note

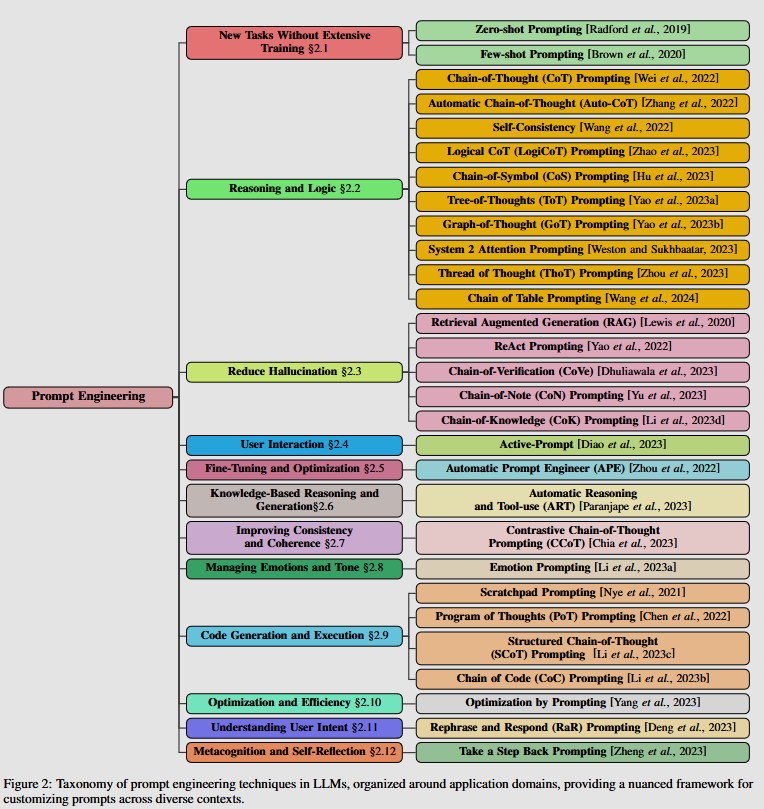

Extensive list of prompting methods from ^44dbf0

Examples

- zero-shot learning

    - give the model only the task instruction, with no solved examples

- few-shot learning

    - append sample task-answer examples to the prompt

    - Many-Shot In-Context Learning shows how results change when the prompt is filled with 2000+ examples. However, weigh the cost of such long prompts and the difficulty of generating such a prompt in the first place.
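A minimal sketch of how zero-shot and few-shot prompts differ, assuming a plain-text completion prompt with an `Input:`/`Output:` layout. The function name `build_few_shot_prompt` and that layout are illustrative choices, not a standard API.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Assemble a prompt: instruction, solved examples, then the new query."""
    parts = [instruction.strip(), ""]
    for task, answer in examples:
        # Each demonstration uses the same format the model is expected to copy.
        parts.append(f"Input: {task}")
        parts.append(f"Output: {answer}")
        parts.append("")
    # End with an open "Output:" so the model completes the answer.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


instruction = "Classify the sentiment as positive or negative."
# Zero-shot is the same prompt with an empty example list.
zero_shot = build_few_shot_prompt(instruction, [], "I loved it")
few_shot = build_few_shot_prompt(
    instruction,
    [("The food was cold.", "negative"), ("Great service!", "positive")],
    "I loved it",
)
print(few_shot)
```

The many-shot variant is the same call with a much longer `examples` list, which is where the cost concern comes from: every example is paid for in input tokens on every request.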

Reasoning

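- Chain-of-Thought - have the model write out intermediate reasoning before the final answer; the simplest (zero-shot) form is adding "Let's think step by step" to your prompt

    - Chain-of-Thought Prompting Elicits Reasoning in Large Language Models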

- schema-guided reasoning - constrain a non-reasoning model to follow a predetermined checklist of reasoning steps (e.g., fields of a structured output that must be filled in order)

- self-consistency + CoT - sample several different CoT outputs for the same prompt and take the most frequent final answer (majority vote)

- Tree-of-Thought - iteratively generate candidate intermediate steps ("thoughts"), evaluate them, and keep expanding the most promising ones in a tree of partial solutions

- Graph-of-Thought - Graph of Thoughts: Solving Elaborate Problems with Large Language Models

- Reasoning with Large Language Models, a Survey
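Schema-guided reasoning can be sketched with the standard library alone, assuming the model is asked to reply in JSON. `CHECKLIST`, its field names, and the prompt wording are hypothetical; in practice the schema would typically be enforced through a provider's structured-output or constrained-decoding feature rather than checked after the fact as done here.

```python
import json

# Hypothetical checklist: the steps the model must fill in, in this order, before answering.
CHECKLIST = ["restate_problem", "relevant_facts", "reasoning_steps", "final_answer"]

def sgr_prompt(question: str) -> str:
    """Ask for a JSON object whose keys walk the model through the checklist."""
    keys = ", ".join(f'"{k}"' for k in CHECKLIST)
    return (
        f"{question}\n"
        f"Reply with only a JSON object with exactly these keys, in this order: {keys}."
    )

def parse_sgr_response(raw: str) -> dict:
    """Reject replies that skip, add, or reorder checklist steps."""
    data = json.loads(raw)  # the resulting dict keeps the key order of the JSON text
    if not isinstance(data, dict) or list(data) != CHECKLIST:
        raise ValueError(f"reply does not follow the checklist {CHECKLIST}")
    return data

# Usage with a hand-written reply standing in for model output.
reply = json.dumps({
    "restate_problem": "Add 17 and 25.",
    "relevant_facts": "Both are integers.",
    "reasoning_steps": "17 + 25 = 42",
    "final_answer": "42",
})
print(parse_sgr_response(reply)["final_answer"])
```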
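Self-consistency on top of CoT can be sketched as majority voting over parsed final answers. `sample` stands for a hypothetical function that calls an LLM at nonzero temperature, and the `Answer: <value>` convention is an assumption made so that answers can be compared; free-form answers would need normalizing first.

```python
import itertools
import re
from collections import Counter
from typing import Callable, Optional

# Zero-shot CoT trigger plus an assumed answer format that makes votes easy to parse.
COT_SUFFIX = "\nLet's think step by step, then end with 'Answer: <value>'."

def extract_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' in a completion, or None if absent."""
    matches = re.findall(r"Answer:\s*(.+)", completion)
    return matches[-1].strip() if matches else None

def self_consistency(sample: Callable[[str], str], question: str, n: int = 5) -> Optional[str]:
    """Sample n CoT completions and return the most frequent final answer."""
    prompt = question + COT_SUFFIX
    answers = [extract_answer(sample(prompt)) for _ in range(n)]
    votes = Counter(a for a in answers if a is not None)
    return votes.most_common(1)[0][0] if votes else None

# Usage with canned completions standing in for independent model samples.
canned = itertools.cycle([
    "3 + 4 = 7 and 7 * 2 = 14. Answer: 14",
    "Doubling 7 gives 14. Answer: 14",
    "3 + 4 * 2 = 11. Answer: 11",
])
print(self_consistency(lambda prompt: next(canned), "What is (3 + 4) * 2?", n=3))
```

One wrong reasoning path (the `11` sample) is outvoted by the two that agree, which is the intuition behind the technique.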

Other

- meta prompting

- ask the model to improve its own answer

- algorithmic prompt optimizations

- User-context prompting with RAG

- Role-specific prompt

    - "You are an expert…"
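Asking the model to improve its own answer can be sketched as a self-refinement loop: draft, critique, rewrite. `llm` is a hypothetical function wrapping whatever model API is in use; the prompt wording and the `DONE` stop signal are assumptions, not a fixed protocol.

```python
from typing import Callable

def self_refine(llm: Callable[[str], str], task: str, max_rounds: int = 3) -> str:
    """Draft an answer, then alternate critique and rewrite until the model reports DONE."""
    answer = llm(f"Task: {task}\nAnswer:")
    for _ in range(max_rounds):
        critique = llm(
            f"Task: {task}\nAnswer: {answer}\n"
            "List concrete problems with this answer, or reply DONE if there are none."
        )
        if critique.strip().upper() == "DONE":
            break  # the model found nothing left to fix
        answer = llm(
            f"Task: {task}\nAnswer: {answer}\nFeedback: {critique}\n"
            "Rewrite the answer so that it fixes every problem in the feedback."
        )
    return answer

def scripted_llm(prompt: str) -> str:
    # Stand-in for a real model call so the control flow can be run offline.
    if "Feedback:" in prompt:
        return "Paris is the capital of France."
    if "reply DONE" in prompt:
        answer_line = prompt.split("\n")[1]  # the "Answer: ..." line
        return "DONE" if answer_line.endswith(".") else "Not a full sentence."
    return "Paris"

print(self_refine(scripted_llm, "Name the capital of France in a full sentence."))
```

`max_rounds` caps the cost, since a model that never replies `DONE` would otherwise keep rewriting indefinitely.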

Resources

- An Empirical Categorization of Prompting Techniques for Large Language Models: A Practitioner’s Guide

- A Systematic Survey of Prompt Engineering in Large Language Models: Techniques and Applications

- Prompt Engineering Guide
