regularization


Contents

- Note

- Regularization for shallow models

- [[#Regularization for shallow models#Methods|Methods]]

- [[#Methods#Lasso (L1)|Lasso (L1)]]

- [[#Methods#Ridge|Ridge]]

- [[#Methods#ElasticNet|ElasticNet]]


- Regularization for deep neural networks

- Resources

Note

- regularization is used to control model complexity and to combat overfitting or multicollinearity

- it typically increases the error on the training set slightly while decreasing the error on the validation set (when λ is chosen well)

- some methods modify the loss function (e.g., L1/L2 penalties), others modify the data (e.g., data augmentation)
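To make the training vs. validation trade-off concrete, here is a minimal NumPy sketch on synthetic data. The data, split sizes, and λ grid are made-up assumptions. It uses Ridge (described below) only because it has a simple closed-form fit, and `ridge_fit` / `mse` are hypothetical helpers. For Ridge, the training MSE never decreases as λ grows. The validation MSE is what one would look at to pick λ.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """L2-regularized least squares, used here only as a convenient example model."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def mse(X, y, w):
    """Mean squared error of a linear model with weights w."""
    return np.mean((y - X @ w) ** 2)

rng = np.random.default_rng(42)
n_train, n_val, p = 30, 200, 20      # few samples, many features: easy to overfit
true_w = np.zeros(p)
true_w[:3] = [2.0, -1.0, 0.5]        # only 3 features actually matter (synthetic assumption)
X = rng.normal(size=(n_train + n_val, p))
y = X @ true_w + rng.normal(size=n_train + n_val)
X_tr, y_tr = X[:n_train], y[:n_train]
X_val, y_val = X[n_train:], y[n_train:]

lams = [0.0, 0.1, 1.0, 10.0, 100.0]
train_err, val_err = [], []
for lam in lams:
    w = ridge_fit(X_tr, y_tr, lam)
    train_err.append(mse(X_tr, y_tr, w))
    val_err.append(mse(X_val, y_val, w))
    print(f"lam={lam:>6}: train MSE={train_err[-1]:.3f}  val MSE={val_err[-1]:.3f}")

# Pick lambda "from experiments": the value with the lowest validation error
best_lam = lams[int(np.argmin(val_err))]
print("best lambda:", best_lam)
```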

Regularization for shallow models

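- features should be normalized (e.g., standardized to zero mean and unit variance) for most algorithms, because the penalty treats all coefficients as if they were on the same scale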
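A minimal standardization sketch in NumPy, assuming a train/test split. The statistics come from the training set only, so no information leaks from the test set. `standardize` is a hypothetical helper; scikit-learn's `StandardScaler` does the same job. Without this step, a feature measured in large units needs only a tiny coefficient and is barely penalized, while a small-unit feature is penalized heavily.

```python
import numpy as np

def standardize(X_train, X_test):
    """Scale each feature to zero mean and unit variance using training-set statistics only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant features
    return (X_train - mean) / std, (X_test - mean) / std

rng = np.random.default_rng(0)
# Two features on very different scales (e.g. metres vs. millimetres)
X_train = np.column_stack([rng.normal(0, 1, 100), rng.normal(0, 1000, 100)])
X_test = np.column_stack([rng.normal(0, 1, 20), rng.normal(0, 1000, 20)])

X_train_s, X_test_s = standardize(X_train, X_test)
print(X_train_s.mean(axis=0).round(6), X_train_s.std(axis=0).round(6))
```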

Methods

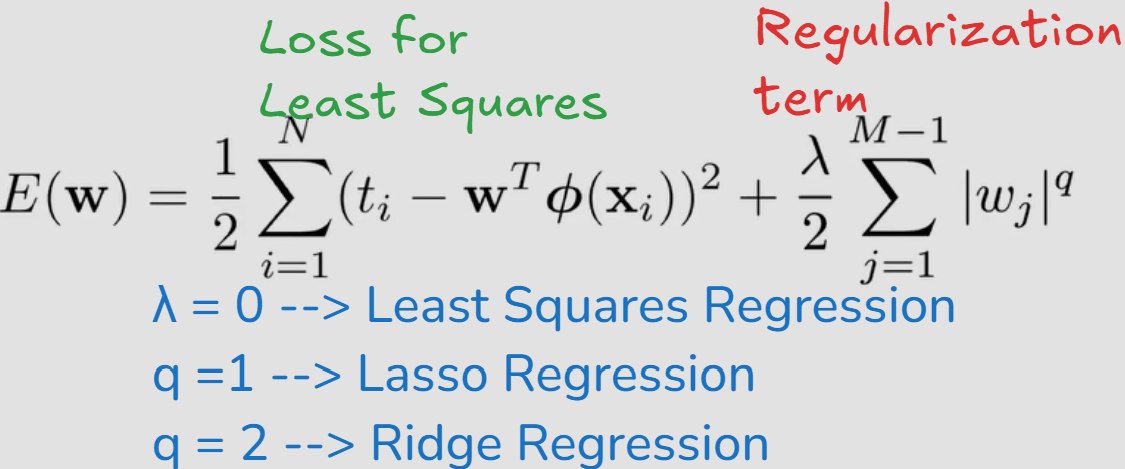

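- λ is a hyperparameter that sets the strength of the penalty; it has to be tuned experimentally (e.g., on a validation set or with cross-validation)

- training means minimizing the sum of two functions: the loss on the data and λ times the penalty on the weights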
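A minimal sketch of this "sum of two functions", assuming a linear model without intercept and mean squared error as the data loss (the formula in image 1 may use a different loss or scaling). All function names are hypothetical. The penalty is passed in as a function, so the same objective covers both L1 and L2.

```python
import numpy as np

def mse(w, X, y):
    """Data term: mean squared error of a linear model."""
    residual = y - X @ w
    return np.mean(residual ** 2)

def l1_penalty(w):
    """Lasso penalty: sum of absolute weights."""
    return np.sum(np.abs(w))

def l2_penalty(w):
    """Ridge penalty: sum of squared weights."""
    return np.sum(w ** 2)

def regularized_objective(w, X, y, lam, penalty):
    """Data loss + lambda * penalty on the weights."""
    return mse(w, X, y) + lam * penalty(w)

X = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
y = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -0.25])

for lam in (0.0, 0.1, 1.0):
    print(lam,
          regularized_objective(w, X, y, lam, l1_penalty),
          regularized_objective(w, X, y, lam, l2_penalty))
```

With λ = 0 both objectives reduce to the plain loss; larger λ gives the penalty more weight relative to the fit.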

Lasso (L1)

- Lasso (L1) - punishes the absolute values of the coefficients ⇒ some coefficients go exactly to 0 (implicit feature selection)
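One way to see why L1 yields exact zeros is coordinate descent with soft-thresholding. This is a sketch under stated assumptions: NumPy, no intercept, synthetic data, and the objective scaled as (1/(2n))·||y - Xw||² + λ·||w||₁, which is the scaling scikit-learn documents for its `Lasso`. `lasso_cd` and `soft_threshold` are hypothetical helpers, not library functions.

```python
import numpy as np

def soft_threshold(a, t):
    """Shrink a toward 0 by t; returns exactly 0 when |a| <= t."""
    return np.sign(a) * max(abs(a) - t, 0.0)

def lasso_cd(X, y, lam, n_iter=200):
    """Coordinate descent for (1/(2n))*||y - Xw||^2 + lam*||w||_1 (no intercept)."""
    n, p = X.shape
    w = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n  # ||X_j||^2 / n for each feature
    for _ in range(n_iter):
        for j in range(p):
            # Partial residual: prediction error ignoring feature j's current contribution
            r_j = y - X @ w + X[:, j] * w[j]
            rho_j = X[:, j] @ r_j / n
            w[j] = soft_threshold(rho_j, lam) / col_sq[j]
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 0.0])  # only the first two features matter
y = X @ true_w + 0.1 * rng.normal(size=200)

for lam in (0.01, 0.5, 5.0):
    print(lam, lasso_cd(X, y, lam).round(3))
```

Any coefficient whose |ρ_j| is at most λ is set exactly to 0, which is why a larger λ zeroes out more coefficients.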

Ridge

-

Ridge (L2) - punishes large coefficients ⇒ makes model robust to small changes in input data, well differentiable

-

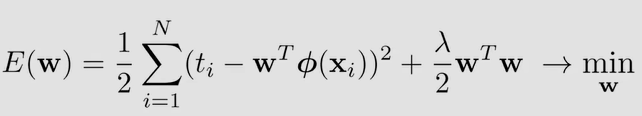

Minimization task becomes a sum of the loss function and the squared weights:

-

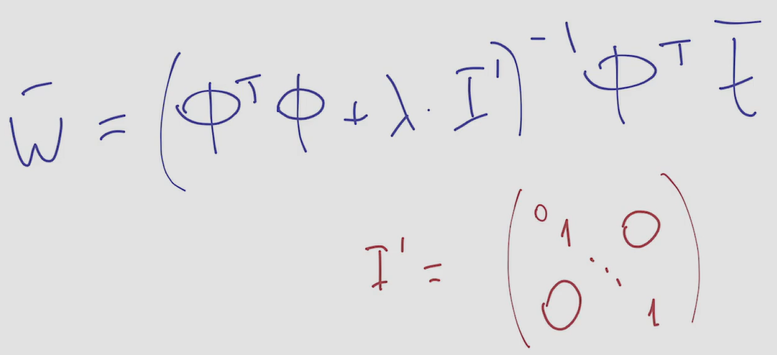

Minimizing the above yields the solution for weights w:
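Assuming the loss is the plain sum of squared errors (no 1/n factor, no intercept), setting the gradient of ||y - Xw||² + λ||w||² to zero gives w = (XᵀX + λI)⁻¹Xᵀy. The NumPy sketch below uses synthetic data with two nearly collinear features; `ridge_closed_form` is a hypothetical name. Increasing λ shrinks the coefficient vector, which keeps the collinear case stable.

```python
import numpy as np

def ridge_closed_form(X, y, lam):
    """Minimizer of ||y - Xw||^2 + lam*||w||^2, i.e. w = (X^T X + lam*I)^(-1) X^T y."""
    p = X.shape[1]
    A = X.T @ X + lam * np.eye(p)
    # Solve the linear system instead of forming the inverse explicitly (more stable)
    return np.linalg.solve(A, X.T @ y)

rng = np.random.default_rng(1)
x1 = rng.normal(size=100)
# Two nearly collinear features: plain least squares (lam = 0) becomes ill-conditioned
X = np.column_stack([x1, x1 + 1e-3 * rng.normal(size=100)])
y = x1 + 0.1 * rng.normal(size=100)

for lam in (0.0, 0.1, 10.0):
    w = ridge_closed_form(X, y, lam)
    print(lam, w.round(3), "norm:", round(float(np.linalg.norm(w)), 3))
```

Adding λI raises every eigenvalue of XᵀX by λ, so the matrix stays invertible even when features are (almost) linearly dependent.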

ElasticNet

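- ElasticNet (L1+L2) - combines both penalties ⇒ keeps Lasso's ability to set coefficients to 0 while the L2 term stabilizes the solution when features are correlated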
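A coordinate-descent sketch of ElasticNet, assuming the parameterization scikit-learn documents for its `ElasticNet`: (1/(2n))·||y - Xw||² + λ·(α·||w||₁ + (1 - α)/2·||w||²). Here λ corresponds to sklearn's `alpha` and α to `l1_ratio`. The data is synthetic and `elastic_net_cd` is a hypothetical helper. α = 1 recovers Lasso and α = 0 recovers Ridge.

```python
import numpy as np

def soft_threshold(a, t):
    """Shrink a toward 0 by t; returns exactly 0 when |a| <= t (source of sparsity)."""
    return np.sign(a) * max(abs(a) - t, 0.0)

def elastic_net_cd(X, y, lam, alpha, n_iter=500):
    """Coordinate descent for
    (1/(2n))*||y - Xw||^2 + lam*(alpha*||w||_1 + (1 - alpha)/2*||w||^2), no intercept."""
    n, p = X.shape
    w = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        for j in range(p):
            r_j = y - X @ w + X[:, j] * w[j]  # residual without feature j
            rho_j = X[:, j] @ r_j / n
            # L1 part -> soft-thresholding; L2 part -> extra shrinkage in the denominator
            w[j] = soft_threshold(rho_j, lam * alpha) / (col_sq[j] + lam * (1 - alpha))
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = X @ np.array([2.0, -1.0, 0.0, 0.0]) + 0.1 * rng.normal(size=200)

for alpha in (1.0, 0.5, 0.0):
    print(alpha, elastic_net_cd(X, y, lam=0.3, alpha=alpha).round(3))
```

With α = 0 the L1 threshold vanishes and the update reduces to ridge regression with penalty nλ on the unscaled squared error, so it matches (XᵀX + nλI)⁻¹Xᵀy.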

Regularization for deep neural networks

Resources

Cheat sheet