Inference Scaling for Long-Context Retrieval Augmented Generation


Note

- Investigates how RAG performance scales as the amount of inference compute increases.

- Considers two advanced RAG variants:

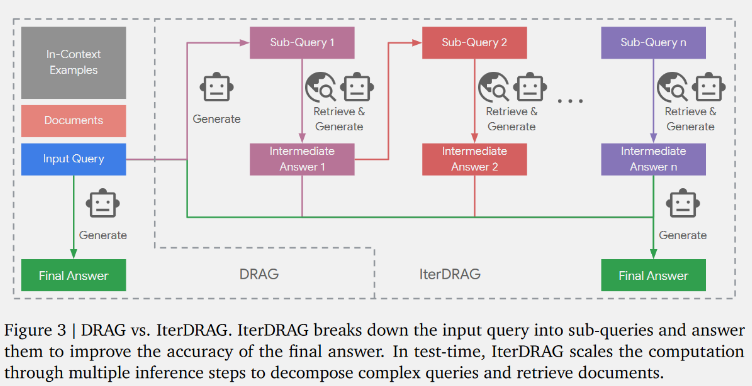

- Demonstration RAG (DRAG): combines RAG with few-shot examples. Its inference compute scales with both the number of retrieved documents and the number of in-context demonstrations (example queries).

- Iterative Demonstration-Based RAG (IterDRAG):

    - Decomposes the query into simpler sub-queries.

    - For each sub-query, performs retrieval and uses the fetched context to generate an intermediate answer.

    - After all sub-queries are resolved, combines the retrieved context, the sub-queries, and their answers to synthesize the final answer.
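The two variants above can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: `decompose`, `retrieve`, and `generate` are hypothetical callables standing in for an LLM-based query decomposer, a retriever, and an LLM call, and the prompt wording is an assumption. Sub-queries are produced up front here for simplicity; a real system may generate them one at a time.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces (assumptions, not from the paper):
Decomposer = Callable[[str], List[str]]      # query -> sub-queries
Retriever = Callable[[str, int], List[str]]  # (query, k) -> top-k documents
Generator = Callable[[str], str]             # prompt -> model output
Demo = Tuple[List[str], str, str]            # (documents, question, answer)


def build_drag_prompt(query: str, documents: List[str],
                      demonstrations: List[Demo]) -> str:
    """DRAG: few-shot demonstrations, then retrieved documents, then the query.

    Prompt length (and so inference compute) grows with both the number
    of documents and the number of demonstrations.
    """
    parts = []
    for demo_docs, demo_question, demo_answer in demonstrations:
        parts.append("\n".join(demo_docs))
        parts.append(f"Question: {demo_question}\nAnswer: {demo_answer}")
    parts.append("\n".join(documents))
    parts.append(f"Question: {query}\nAnswer:")
    return "\n\n".join(parts)


def iter_drag(query: str, decompose: Decomposer, retrieve: Retriever,
              generate: Generator, k: int = 2) -> str:
    """IterDRAG-style loop: answer each sub-query, then synthesize."""
    context: List[str] = []             # all documents retrieved so far
    steps: List[Tuple[str, str]] = []   # (sub-query, intermediate answer)

    for sub_query in decompose(query):
        docs = retrieve(sub_query, k)
        for doc in docs:
            if doc not in context:      # avoid repeating documents
                context.append(doc)
        prompt = "\n".join(docs) + f"\nQuestion: {sub_query}\nAnswer:"
        steps.append((sub_query, generate(prompt).strip()))

    # Combine retrieved context, sub-queries, and intermediate answers.
    trace = "\n".join(
        f"Follow up: {q}\nIntermediate answer: {a}" for q, a in steps
    )
    final_prompt = (
        "\n".join(context) + f"\n{trace}\nQuestion: {query}\nFinal answer:"
    )
    return generate(final_prompt).strip()
```

In this sketch, each sub-query adds one retrieval and one generation call, so IterDRAG's compute also grows with the number of iterations, on top of the document and demonstration counts that drive DRAG.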

Resources
