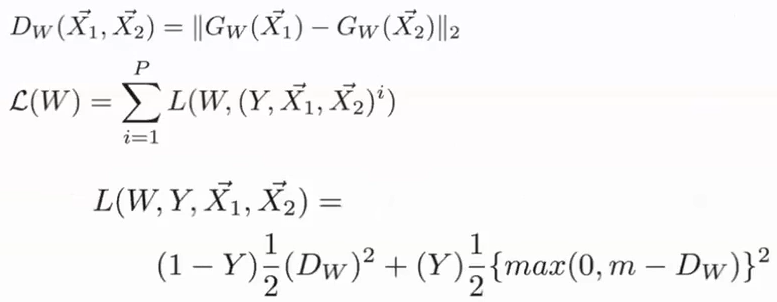

contrastive loss


Note

- used for object retrieval applications

- an older loss (from 2006) that is still used in academic papers as a baseline, to show that a model's improvement comes from the algorithm and not from a better loss

- asks the model to produce representations of the input objects $X_1$ and $X_2$ such that they are:

    - if same class: as close together as possible

    - if different class: at least $m$ units apart in their embedding space ($m$ is the margin)

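- $X_1$ and $X_2$ are input objects (images)

- $G_W(X)$ is the vector representation of $X$ after passing through the neural network with parameters $W$

- $D_W = \lVert G_W(X_1) - G_W(X_2) \rVert_2$ is the Euclidean distance between the vector representations

- $\mathcal{L}$ is the aggregate loss over pairs $(X_1, X_2)$, given the algorithm parameters $W$ and the label $Y$ (0 if $X_1$ and $X_2$ belong to the same class, 1 otherwise)

- the loss for each pair, $L = (1 - Y)\,\tfrac{1}{2} D_W^2 + Y\,\tfrac{1}{2} \max(0,\, m - D_W)^2$ with margin $m$, consists of two parts, but one of them is always 0 because either $Y = 0$ or $1 - Y = 0$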

- when $Y = 0$ (same class)

    - $L = \tfrac{1}{2} D_W^2$, where $D_W$ is the distance between the two representations

    - we want to minimize the loss, that is, we want objects from the same class to be closer to each other

    - the model keeps updating its weights for each pair $X_1$, $X_2$ until their representations are equal, $G_W(X_1) = G_W(X_2)$ (in practice this is never reached exactly)

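- when $Y = 1$ (different classes)

    - $L = \tfrac{1}{2} \max(0,\, m - D_W)^2$, where $D_W$ is the distance between the two representations and $m$ is the margin

    - we want objects of different classes to be at least $m$ units away from each other

    - as long as $D_W \ge m$, the loss and its gradient are zero, so the model weights are not updated for this pair $X_1$, $X_2$ (the model is satisfied)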
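The two cases above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the function names, the 2-D example embeddings, and the default margin of 1.0 are assumptions made for the example. In a real setup the embeddings would come from the network and the loss would be computed inside an autodiff framework.

```python
import math


def euclidean_distance(a, b):
    """D_W: Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(emb1, emb2, y, margin=1.0):
    """Per-pair contrastive loss.

    y = 0 means the pair is from the same class, y = 1 means different classes.
    `margin` plays the role of m (the default of 1.0 is an arbitrary choice).
    """
    d = euclidean_distance(emb1, emb2)
    # Active only when y == 0: pulls same-class pairs together.
    same_class_term = (1 - y) * 0.5 * d ** 2
    # Active only when y == 1: pushes different-class pairs apart,
    # but contributes nothing once they are at least `margin` apart.
    diff_class_term = y * 0.5 * max(0.0, margin - d) ** 2
    return same_class_term + diff_class_term


# Hypothetical embeddings standing in for network outputs.
a = [0.0, 0.0]
b = [0.3, 0.4]  # close to a (distance 0.5)
c = [3.0, 4.0]  # far from a (distance 5.0)

same_pair = contrastive_loss(a, b, y=0)        # penalized for any nonzero distance
close_diff_pair = contrastive_loss(a, b, y=1)  # penalized: closer than the margin
far_diff_pair = contrastive_loss(a, c, y=1)    # zero: already beyond the margin
```

Only one of the two terms is ever nonzero for a given pair, and a different-class pair that is already beyond the margin contributes no loss and therefore no weight update.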

Resources
