layer normalization

scroll ↓ to Resources

Note

- Each layer in a transformer, consisting of a multi-head attention module and a feed-forward layer, employs layer normalization and a residual connection.

- Belongs to the regularization techniques for neural networks, which help control overfitting; layer norm does this by keeping the values flowing through the network from getting too big or too small.

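- The layer norm step adjusts the values coming out of a layer so that they approximate the shape of a Gaussian with a mean of 0 and standard deviation of 1. The statistics are computed over the outputs of the layer for each input separately, and a learned scale and shift are then applied.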

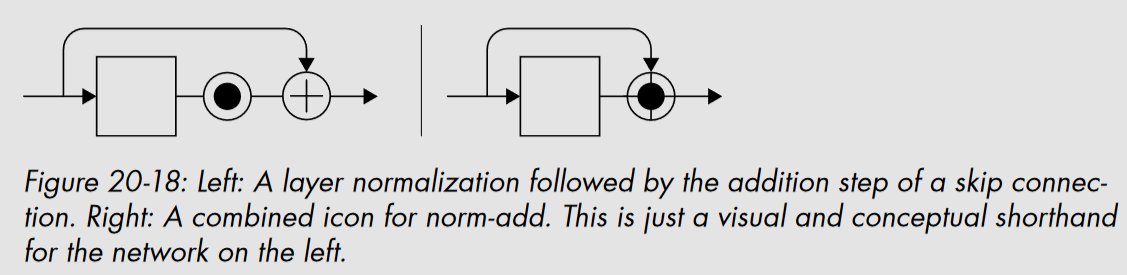
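A minimal sketch of the normalization described above, in plain Python. The names `layer_norm`, `gain`, `bias`, and `eps` are illustrative choices, not taken from this note; real implementations usually keep one learned gain and bias per feature, while this sketch uses single scalars for brevity.

```python
import math

def layer_norm(values, gain=1.0, bias=0.0, eps=1e-5):
    """Rescale one vector of layer outputs to mean 0 and standard deviation 1."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    # eps guards against division by zero when all values are equal
    scale = 1.0 / math.sqrt(variance + eps)
    # gain and bias stand in for the learned scale and shift (assumed scalars here)
    return [gain * (v - mean) * scale + bias for v in values]

outputs = [2.0, 4.0, 6.0, 8.0]
normalized = layer_norm(outputs)
```

With the default `gain` and `bias`, `normalized` has a mean very close to 0 and a standard deviation very close to 1, whatever the scale of `outputs`.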

- A popular approach places the layer norm just before the addition step of a skip connection. Since these two operations always come in pairs, it’s convenient to combine them into a single operation that we call norm-add.

- see also batch normalization

- requires initialization
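The norm-add combination mentioned in the notes above could be sketched as follows, assuming the layer norm is applied to the layer's output and the result is then added to the skip-connection input. `norm_add` and `toy_layer` are hypothetical names used only for illustration; `toy_layer` stands in for an attention or feed-forward module.

```python
import math

def layer_norm(values, eps=1e-5):
    """Rescale a vector to mean 0 and standard deviation 1 (no learned parameters)."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    scale = 1.0 / math.sqrt(variance + eps)
    return [(v - mean) * scale for v in values]

def norm_add(skip_input, layer_output):
    """Layer norm on the layer output, followed by the skip-connection addition."""
    normalized = layer_norm(layer_output)
    return [s + n for s, n in zip(skip_input, normalized)]

def toy_layer(values):
    # Hypothetical stand-in for an attention or feed-forward module
    return [2.0 * v + 1.0 for v in values]

x = [1.0, 2.0, 3.0, 4.0]
y = norm_add(x, toy_layer(x))
```

Because the normalized values have zero mean, the addition leaves the mean of `x` unchanged in this sketch and only shifts individual entries.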

Resources
