Gated Linear Unit

Note

- Gated Linear Units [Dauphin et al., 2016] consist of the component-wise product of two linear projections, one of which is first passed through a sigmoid function. Variations on GLU are possible, using different nonlinear (or even linear) functions in place of sigmoid.

- "Gated" means that one component controls how much of the signal is let through. Most often this is achieved by multiplying each element of the signal by a gate value between 0 and 1, which is what the sigmoid produces in GLU.

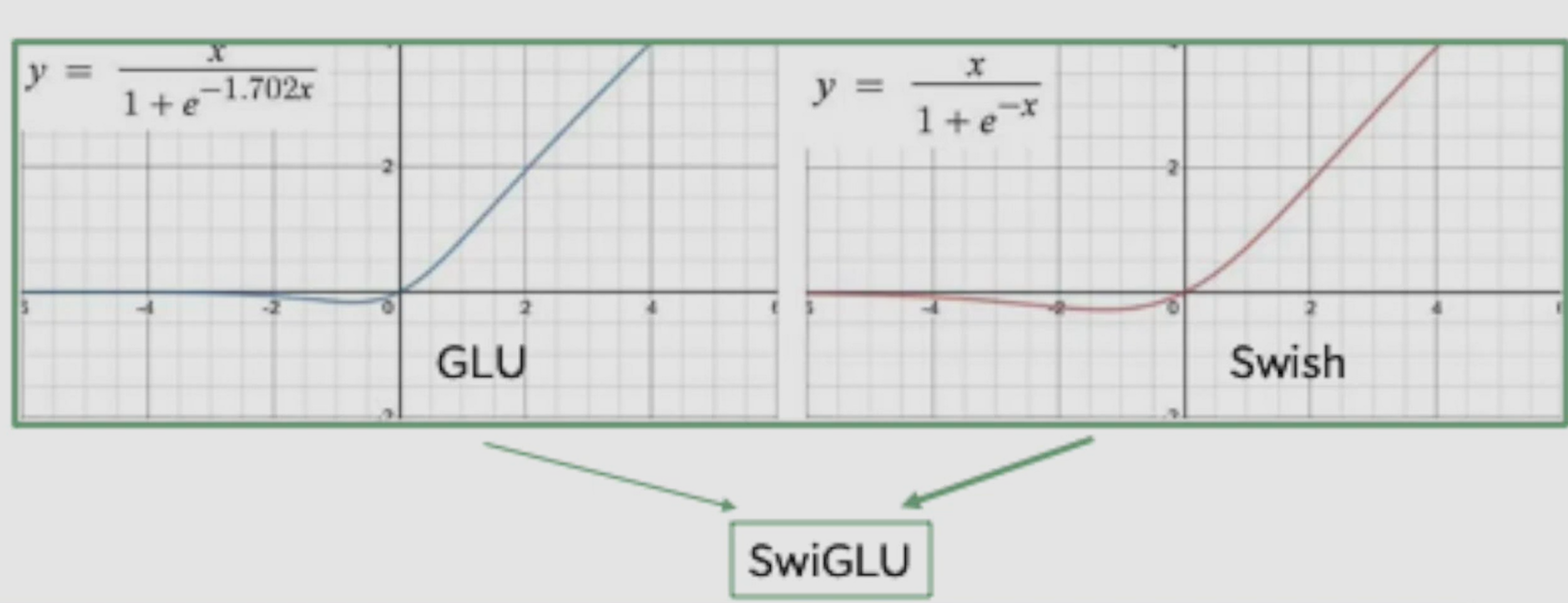
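A minimal sketch of the gating described above, in dependency-free Python. The helper names (`sigmoid`, `linear`, `glu`) and the toy weights are made up for illustration; the shapes follow the usual formulation GLU(x) = sigmoid(xW + b) * (xV + c), with `*` meaning component-wise product. It is not numerically hardened (very negative inputs would overflow `math.exp`).

```python
import math

def sigmoid(z):
    # Squashes any real number into (0, 1); this is the "gate" value.
    return 1.0 / (1.0 + math.exp(-z))

def linear(x, W, b):
    # x @ W + b for a vector x (length d_in) and a d_in x d_out matrix W.
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
            for j in range(len(b))]

def glu(x, W, b, V, c):
    # First projection goes through the sigmoid and acts as the gate.
    gate = [sigmoid(z) for z in linear(x, W, b)]
    # Second projection stays linear and carries the signal.
    value = linear(x, V, c)
    # Component-wise product: each gate entry scales one signal entry.
    return [g * v for g, v in zip(gate, value)]

# Toy example (hypothetical weights): gate pre-activations are [0, 10],
# so the first component is half open and the second is almost fully open.
x = [1.0, -2.0]
W = [[0.0, 10.0], [0.0, 0.0]]
b = [0.0, 0.0]
V = [[1.0, 0.0], [0.0, 1.0]]  # identity: the signal is x itself
c = [0.0, 0.0]
out = glu(x, W, b, V, c)
```

With these weights the first output is exactly half of the first signal entry, while the second is passed through almost unchanged.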

SwiGLU

- used in Llama 2 and Llama 3 (see paper review - Llama 3 Herd of Models)

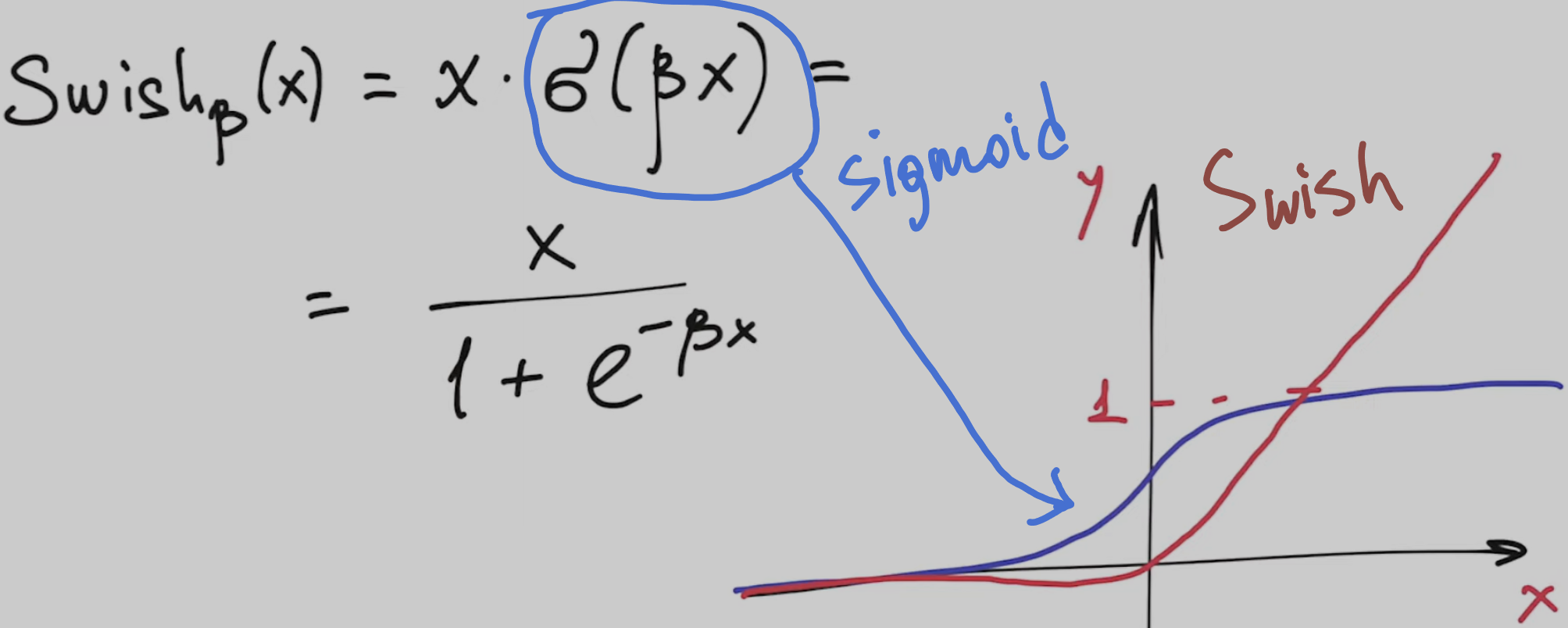

- is a combination of GLU and Swish: a GLU variant that uses Swish in place of the sigmoid

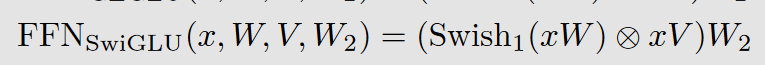
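For reference, the definitions can be written out as follows. The symbols are assumptions that may not match the images exactly: $W, V$ are the two projection matrices, $b, c$ their biases, $\sigma$ the sigmoid, $\otimes$ the component-wise product, and $\beta$ the Swish parameter (commonly set to 1).

$$
\begin{aligned}
\mathrm{GLU}(x, W, V, b, c) &= \sigma(xW + b) \otimes (xV + c) \\
\mathrm{Swish}_\beta(x) &= x \, \sigma(\beta x) \\
\mathrm{SwiGLU}(x, W, V, b, c, \beta) &= \mathrm{Swish}_\beta(xW + b) \otimes (xV + c)
\end{aligned}
$$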

- Swish applied to one linear projection of the input, multiplied component-wise by a second linear projection of the same input
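A rough sketch of a SwiGLU feed-forward layer in dependency-free Python, assuming the bias-free form (Swish(xW) * xV) W2 with beta = 1. The function names (`swish`, `matvec`, `ffn_swiglu`) and the toy weights are hypothetical and chosen only to make the arithmetic easy to follow; real models use learned matrices and a tensor library.

```python
import math

def swish(z, beta=1.0):
    # Swish_beta(z) = z * sigmoid(beta * z)
    return z / (1.0 + math.exp(-beta * z))

def matvec(x, W):
    # x @ W for a vector x (length d_in) and a d_in x d_out matrix W.
    return [sum(x[i] * W[i][j] for i in range(len(x)))
            for j in range(len(W[0]))]

def ffn_swiglu(x, W, V, W2):
    # Gate branch: linear projection followed by Swish.
    gate = [swish(z) for z in matvec(x, W)]
    # Value branch: plain linear projection of the same input.
    value = matvec(x, V)
    # Component-wise product, then project back down with W2.
    hidden = [g * v for g, v in zip(gate, value)]
    return matvec(hidden, W2)

# Toy example (hypothetical weights): d_model = 2, hidden size = 3.
x = [1.0, 2.0]
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # xW = [1, 2, 0]
V = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]   # xV = [1, 2, 3]
W2 = [[1.0], [1.0], [1.0]]               # sums the hidden units
y = ffn_swiglu(x, W, V, W2)
```

Unlike the sigmoid gate in GLU, the Swish gate is not confined to the range 0 to 1: it is close to the identity for large positive inputs and close to zero for large negative ones. Here the third hidden unit has gate input 0, so it contributes nothing to the output.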

Why does it work?

"We offer no explanation as to why these architectures seem to work; we attribute their success, as all else, to divine benevolence." (Shazeer, 2020, "GLU Variants Improve Transformer")

Links

- https://blog.paperspace.com/swish-activation-function

- https://medium.com/@neuralnets/swish-activation-function

- SwiGLU Explained | Papers With Code
